Multi-Model Apps and OpenRouter

By Shlok Khemani (@shloked_)

The OpenAI debacle last month was peak tech drama. While most of us were glued to Twitter (yes, it still feels weird to call it X), tracking which OpenAI employee tweeted what, developers around the world were scrambling to add other models to their apps in case the company’s APIs went down.

Amid all the chaos, one thing became obvious: developers and users cannot rely on a small group of companies for access to AI. The technology, much like the internet, is important enough that the infrastructure serving it must be as robust as possible, with no single point of failure.

We are on a clear trajectory towards a multi-model world.

In this piece, I’ll cover in more detail why developers need to make their apps multi-model and the challenges they face in doing so. Finally, I’ll talk about OpenRouter, a relatively under-the-radar but very important service that is working to solve some of these challenges.

Why Multi-Model

Reliability

Large language models (LLMs) run on servers. These servers, like any servers, can have outages for several reasons, from power failures to cyber attacks to a lack of maintenance (sometimes because all employees threaten to join Microsoft!).

If the critical functions of a program rely on LLM calls, developers have to plan for scenarios where the LLM of their choice gives slow or no responses.
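One way to plan for this, sketched in Python: wrap each model behind a plain function, try them in order, and move on when one raises an error or exceeds a time budget. This is a minimal sketch under stated assumptions; the providers in the usage example are hypothetical stand-ins rather than real SDK calls, and the timeouts are arbitrary.

```python
import concurrent.futures
import time
from typing import Callable, Sequence

# A "provider" is any function that takes a prompt and returns text.
# In a real app it would wrap an SDK or HTTP call; here it is a stand-in.
Provider = Callable[[str], str]


def complete_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Provider]],
    timeout_s: float = 10.0,
) -> tuple[str, str]:
    """Try each (name, provider) in order and return (name, text) from the
    first one that answers within timeout_s without raising."""
    errors: list[str] = []
    for name, provider in providers:
        pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
        future = pool.submit(provider, prompt)
        try:
            return name, future.result(timeout=timeout_s)
        except concurrent.futures.TimeoutError:
            errors.append(f"{name}: no response within {timeout_s}s")
        except Exception as exc:  # outage, rate limit, auth error, ...
            errors.append(f"{name}: {exc!r}")
        finally:
            # Don't wait on a hung call; its worker thread finishes on its own.
            pool.shutdown(wait=False)
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Usage with hypothetical providers: the first is down, the second is too slow.
def primary_down(prompt: str) -> str:
    raise ConnectionError("503 Service Unavailable")


def backup_slow(prompt: str) -> str:
    time.sleep(0.5)
    return "late answer"


def backup_healthy(prompt: str) -> str:
    return f"echo: {prompt}"


name, text = complete_with_fallback(
    "Summarize this paper.",
    [("primary", primary_down), ("slow", backup_slow), ("backup", backup_healthy)],
    timeout_s=0.1,
)
print(name, text)  # backup echo: Summarize this paper.
```

The thread pool exists only to enforce the timeout; a slow call is abandoned rather than cancelled, so a real app would also set timeouts on the underlying HTTP client.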

Different use cases

No two LLMs are the same: they differ in training data, size, architecture, safety alignment, and several other factors. They can also be fine-tuned to specialize in specific tasks. As a result, each model is unique and serves particular use cases better than others.

GPT-4 is great for most general queries, while Claude is better for very long ones. Neither is useful for real-time information, however. For that, you’ll have to call Perplexity’s pplx models (trained on search results) or xAI’s upcoming APIs (trained on Twitter discourse).

For complex applications, developers might even want to integrate more than one model to serve different functions.

Consider, for example, an LLM-based research assistant that lets users upload PDF research papers and interact with them. For most queries, GPT-4 suffices. However, if the PDF runs to hundreds of pages, beyond GPT-4’s context window, the developers might want to use Claude instead. If the software detects that the uploaded paper is related to medicine, they might want to send queries to Google’s Med-PaLM, an LLM that specializes in medical questions. And if the user wants real-time data, such as related papers published in the past month, the pplx models would be most appropriate.
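The routing logic in this example can be sketched as a plain function. This is a minimal sketch: the model labels are placeholders rather than real API model IDs, the topic is assumed to come from some classifier the app already has, and the 8,192-token cutoff assumes the original GPT-4 context window.

```python
from dataclasses import dataclass


@dataclass
class Query:
    paper_tokens: int        # length of the uploaded paper, in tokens
    topic: str               # e.g. the output of a topic classifier
    needs_recent_data: bool  # user asked about, say, last month's papers


# Assumption for illustration: the original 8K-token GPT-4 context window.
GPT4_CONTEXT_TOKENS = 8_192


def choose_model(q: Query) -> str:
    """Route one query to a model label, following the example above.
    Checks run from most to least specific need; the order is a design choice."""
    if q.needs_recent_data:
        return "pplx"       # search-grounded, knows about recent papers
    if q.topic == "medicine":
        return "med-palm"   # domain-specialized model
    if q.paper_tokens > GPT4_CONTEXT_TOKENS:
        return "claude"     # much longer context window
    return "gpt-4"          # default for most queries


print(choose_model(Query(paper_tokens=3_000, topic="physics", needs_recent_data=False)))    # gpt-4
print(choose_model(Query(paper_tokens=120_000, topic="physics", needs_recent_data=False)))  # claude
```

Putting the recency and domain checks first means a long medical paper still goes to the medical model; an app that cares more about fitting the whole paper into context would reorder the checks.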

You get the gist. The most carefully designed AI apps will leverage the unique strengths of different LLMs to provide users with the best experience possible.

Cost

I’ve previously written about how the economics of AI apps are very different from traditional software. Each LLM call costs a non-trivial amount of money: the price depends on the size of the model and scales with the length of the query and response. Moreover, these costs are in constant flux and can change in cases like:

The model provider raising or lowering prices: OpenAI recently announced a massive drop in prices for its GPT APIs, so many applications for which GPT-4 was previously too expensive can now use the more powerful model. Pricing across other providers is just as dynamic. Because we are currently in a race to the bottom, prices are mostly falling, but once the industry matures, you can expect the winners of this race to raise them as they look to build sustainable businesses.

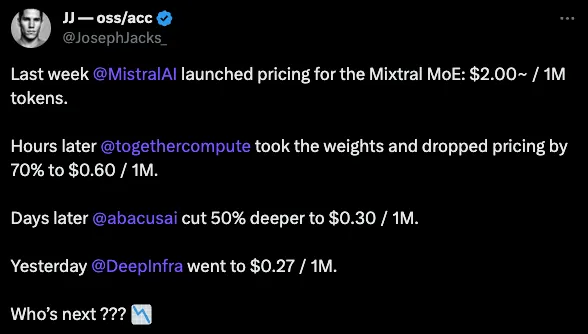

Newer models enabling similar capabilities for lower prices: LLM development is a fast-moving field, with new models and architectural advances appearing almost daily. Mistral-7B, a recently released open-source model, for example, approaches GPT-3.5 on many tasks at a fraction of the price.

This means that developers, to improve their unit economics, will constantly be on the lookout for cheaper models that can meet their use cases. On the flip side, in case a provider increases prices, they will look to switch models to preserve their margins.
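Comparing models on unit economics comes down to simple arithmetic. Here is a minimal cost model, assuming the common scheme of separate per-token prices for input and output; the prices below are hypothetical round numbers, not any provider’s current rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    usd_per_1k_input: float   # price per 1,000 prompt tokens
    usd_per_1k_output: float  # price per 1,000 completion tokens


def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Input and output tokens are billed separately, so the cost of a call
    grows linearly with both the prompt and the response."""
    return (input_tokens / 1000) * p.usd_per_1k_input + (
        output_tokens / 1000
    ) * p.usd_per_1k_output


def monthly_cost(p: Pricing, calls: int, input_tokens: int, output_tokens: int) -> float:
    return calls * request_cost(p, input_tokens, output_tokens)


# Hypothetical price points for a large and a small model.
large = Pricing(usd_per_1k_input=0.03, usd_per_1k_output=0.06)
small = Pricing(usd_per_1k_input=0.001, usd_per_1k_output=0.002)

# 100,000 calls a month, each with a 1,500-token prompt and a 500-token answer.
for label, p in [("large", large), ("small", small)]:
    print(label, f"${monthly_cost(p, 100_000, 1_500, 500):,.2f}")
# large $7,500.00
# small $250.00
```

At these made-up prices, the same workload costs 30 times more on the larger model, which is why a cheaper model that is good enough for the use case is worth hunting for.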

Censorship

While it is important for companies to align LLMs to give safe responses and adhere to legal guidelines, they can sometimes take the process too far, hampering the model’s ability to perform certain tasks. The internet is full of such instances, from LLMs refusing to write violent stories, to telling jokes about some subjects but not others, to declining to process documents with certain content.

Not all of this is intentional. LLMs are, by nature, non-deterministic and unpredictable, and we still don’t fully understand how they work. They may answer a query one way one day and a different way the next, sometimes even refusing outright. This makes building applications on top of LLMs tricky for developers, who strive for consistent user experiences.

All of this makes it important for developers to incorporate more than one model in their apps. If the preferred model refuses to answer a query, they can hope one of their backups will.
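A rough sketch of that backup strategy, assuming refusals can be spotted from how an answer opens. The phrase list is a hypothetical heuristic, not a reliable detector (a production app might use a classifier or a second model call instead), and the two models in the example are stand-in lambdas.

```python
import re
from typing import Callable, Optional, Sequence

# Hypothetical heuristic: phrases that often open a refusal. A real app would
# tune this list for the models it calls, or use a classifier instead.
REFUSAL_OPENERS = re.compile(
    r"^\s*(i'?m sorry|i apologi[sz]e|i can(no|')t|i am unable|as an ai)",
    re.IGNORECASE,
)


def looks_like_refusal(answer: str) -> bool:
    return REFUSAL_OPENERS.search(answer) is not None


def first_non_refusal(
    prompt: str, models: Sequence[tuple[str, Callable[[str], str]]]
) -> Optional[tuple[str, str]]:
    """Ask each model in turn and return (name, answer) for the first answer
    that doesn't look like a refusal, or None if every model declines."""
    for name, ask in models:
        answer = ask(prompt)
        if not looks_like_refusal(answer):
            return name, answer
    return None


# Hypothetical models: a cautious primary and a more permissive backup.
models = [
    ("primary", lambda p: "I'm sorry, but I can't help with that."),
    ("backup", lambda p: "The swords clashed at dawn..."),
]
print(first_non_refusal("Write a duel scene.", models))
# ('backup', 'The swords clashed at dawn...')
```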

Challenges

Hopefully, I’ve made clear why developers need to plan for multi-model apps. Doing so in practice, however, comes with its own set of challenges.

API Management

Accessing LLMs requires developers to procure API keys from a provider and recharge them with credits. If they want their app to be multi-model, they have to repeat this process for every LLM they intend to integrate.

For example, if the primary model an app relies on is GPT-4 with Claude as a fail-safe, developers have to create and fund API keys with both providers, OpenAI and Anthropic. They then have to individually keep track of each API key’s usage and purchase more credits as and when needed. Of course, if an API key runs out of credits, the provider stops responding.

For simple applications that use no more than one or two models, managing keys and tracking usage individually might be feasible. For applications that rely on many models and more involved logic, manual management does not scale.
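To see why this becomes tedious, here is roughly the bookkeeping an app has to do itself when it holds one prepaid account per provider. A minimal sketch: the `ProviderAccount` and `Ledger` classes and the $5 low-balance threshold are hypothetical; `OPENAI_API_KEY` and `ANTHROPIC_API_KEY` are the environment variables the official OpenAI and Anthropic SDKs conventionally read.

```python
import os
from dataclasses import dataclass, field


@dataclass
class ProviderAccount:
    env_var: str          # environment variable holding this provider's key
    balance_usd: float    # prepaid credits left, tracked by the app itself
    spent_usd: float = 0.0

    def api_key(self) -> str:
        key = os.environ.get(self.env_var)
        if not key:
            raise KeyError(f"{self.env_var} is not set")
        return key


@dataclass
class Ledger:
    accounts: dict[str, ProviderAccount]
    low_balance_usd: float = 5.0
    alerts: list[str] = field(default_factory=list)

    def record(self, provider: str, cost_usd: float) -> None:
        """Charge one call to a provider and flag accounts that need a top-up."""
        acct = self.accounts[provider]
        acct.spent_usd += cost_usd
        acct.balance_usd -= cost_usd
        if acct.balance_usd < self.low_balance_usd:
            self.alerts.append(f"top up {provider}: ${acct.balance_usd:.2f} left")


ledger = Ledger({
    "openai": ProviderAccount("OPENAI_API_KEY", balance_usd=20.0),
    "anthropic": ProviderAccount("ANTHROPIC_API_KEY", balance_usd=6.0),
})
ledger.record("openai", 3.0)
ledger.record("anthropic", 2.0)
print(ledger.alerts)  # ['top up anthropic: $4.00 left']
```

Every provider added to the app means another entry here, another balance to top up, and another dashboard to reconcile against.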

Pricing

The pricing for a closed-source model is set by its parent company and in most cases, it is difficult to find another provider with cheaper rates.

For open-source models, however, the weights are publicly available for anyone to run. This means that multiple providers, each with its own hardware setup, compete to serve us these models. Naturally, this translates into constantly changing prices that depend on factors like the type of GPUs used, the geography of servers, and the scale of a provider’s operations.

For a developer, identifying the cheapest provider that can serve their models reliably is an important business decision. But it’s not easy to keep track of.
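Framed as code, the decision looks something like this sketch: among hosts serving the same open-source weights, take the cheapest one whose observed reliability clears a bar. The host names, prices, and the 99% threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Offer:
    provider: str             # a host serving the same open-source model
    usd_per_1m_tokens: float  # that host's current price
    success_rate: float       # share of recent requests that succeeded, 0..1


def cheapest_reliable(offers: Sequence[Offer], min_success: float = 0.99) -> Optional[Offer]:
    """Return the cheapest offer whose success rate clears min_success,
    or None if no host qualifies."""
    eligible = [o for o in offers if o.success_rate >= min_success]
    return min(eligible, key=lambda o: o.usd_per_1m_tokens, default=None)


offers = [
    Offer("host-a", usd_per_1m_tokens=0.20, success_rate=0.999),
    Offer("host-b", usd_per_1m_tokens=0.15, success_rate=0.95),   # cheapest, but flaky
    Offer("host-c", usd_per_1m_tokens=0.18, success_rate=0.995),
]
print(cheapest_reliable(offers).provider)  # host-c
```

The hard part is not this function but keeping `offers` current, since both prices and reliability shift constantly.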

Syntax

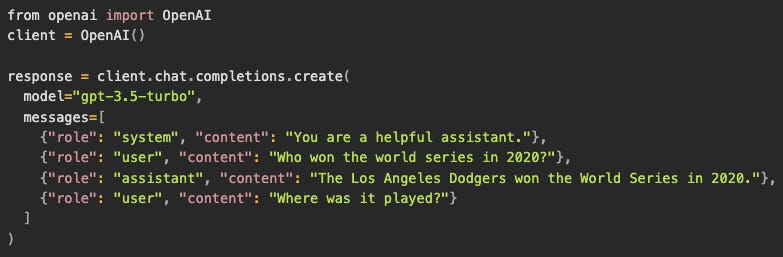

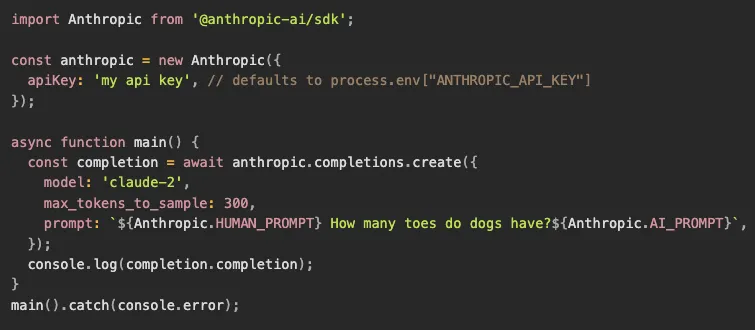

Each LLM provider has a different syntax to interact with their models.

Here’s OpenAI’s for a basic prompt:

Compare this to Claude’s:

While they are similar in some ways, they are very different in others.
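To make the differences concrete, here is a sketch that only builds the raw HTTP requests, assuming OpenAI’s Chat Completions endpoint and Anthropic’s Messages endpoint as each provider documents them. The `anthropic-version` value and the model names are examples from around the time of writing, so check the current docs; nothing is sent over the network.

```python
from typing import Any

Request = tuple[str, dict[str, str], dict[str, Any]]  # (url, headers, JSON body)


def openai_request(api_key: str, system: str, prompt: str) -> Request:
    # OpenAI Chat Completions: bearer-token auth, and the system prompt is
    # just another entry in the messages list.
    url = "https://api.openai.com/v1/chat/completions"
    headers = {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
    body = {
        "model": "gpt-4",
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
        ],
    }
    return url, headers, body


def anthropic_request(api_key: str, system: str, prompt: str) -> Request:
    # Anthropic Messages: key and API version go in custom headers, the system
    # prompt is a top-level field, and max_tokens is required.
    url = "https://api.anthropic.com/v1/messages"
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    body = {
        "model": "claude-2.1",
        "max_tokens": 1024,
        "system": system,
        "messages": [{"role": "user", "content": prompt}],
    }
    return url, headers, body


def extract_text(provider: str, response: dict[str, Any]) -> str:
    # Responses differ too: OpenAI nests the text under choices[0].message,
    # while Anthropic returns a list of content blocks.
    if provider == "openai":
        return response["choices"][0]["message"]["content"]
    if provider == "anthropic":
        return response["content"][0]["text"]
    raise ValueError(f"unknown provider: {provider}")


url, headers, body = anthropic_request("YOUR_KEY", "Answer briefly.", "What is a context window?")
print(url)  # https://api.anthropic.com/v1/messages
```

Three differences show up even in this tiny example: how the key is passed, where the system prompt goes, and where the answer text sits in the response.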

Integrating a new LLM requires developers to familiarise themselves with the syntax and limitations of its API, and to test their prompts against it thoroughly. At scale, with new models constantly being released, the time spent on these tasks, which don’t relate directly to the core application, adds up.

Which model to use?

Because the pace of development in the LLM space is currently exponential, new models are released every other day, by startups and incumbents alike. Each has its strengths and weaknesses and is better suited to some tasks than others. Keeping track of these developments is practically a full-time job, and almost impossible for a developer who isn’t on Twitter all day.

So how do developers decide which model to use?

One indicator is a model’s score across benchmarks, standard metrics by which LLMs are evaluated. But while these are great for theoretical comparisons, they aren’t a reliable indicator of usefulness in real-world applications.

In a future that will have thousands of models, this problem is only going to get more complex.

Enter OpenRouter

Started by OpenSea co-founder and former CTO Alex Atallah, OpenRouter brands itself as “a unified interface for LLMs”. In other words, it is a service that makes it easy for developers to make their apps multi-model, solving many of the challenges I’ve highlighted.

OpenRouter acts as an intermediary, a router, between developers’ LLM requests and the providers of the requested models. With a single standardized API, developers can easily switch between models and providers.

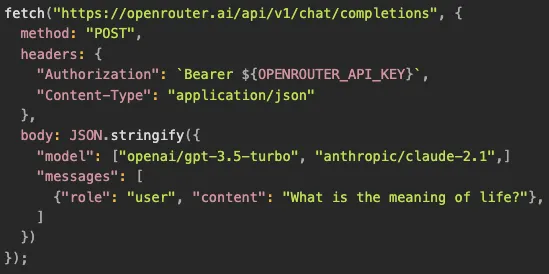

Here’s an OpenRouter API call for a developer to integrate both GPT-3.5 and Claude into their app:

Let’s break down what’s happening here.

- The developer procures and funds a single API key from OpenRouter, instead of two separate ones from OpenAI and Anthropic.
- The prompt is first sent to GPT-3.5. If, for some reason, the provider doesn’t respond or the model refuses to answer the question, the request is routed to Claude-2.1.
- The developer uses the same syntax to interact with both models, even though their native APIs differ (as we saw earlier).
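A sketch of such a call as a raw HTTP request, assuming OpenRouter’s OpenAI-compatible endpoint and the `models` fallback list with `route: "fallback"` that its docs described around the time of writing; the model IDs and the `OPENROUTER_API_KEY` variable name are illustrative. Per OpenRouter’s docs, the fallback is triggered when the first model returns an error (for example downtime, rate limiting, or a moderation flag), so verify the current parameters and triggers before relying on them.

```python
import json
import os
import urllib.request
from typing import Any

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def openrouter_fallback_request(
    prompt: str, api_key: str
) -> tuple[str, dict[str, str], dict[str, Any]]:
    """One key, one endpoint, one OpenAI-style body for both models."""
    headers = {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
    body = {
        # Tried in order: if GPT-3.5 returns an error, OpenRouter moves on to Claude 2.1.
        "models": ["openai/gpt-3.5-turbo", "anthropic/claude-2.1"],
        "route": "fallback",
        "messages": [{"role": "user", "content": prompt}],
    }
    return OPENROUTER_URL, headers, body


def send(url: str, headers: dict[str, str], body: dict[str, Any], timeout_s: float = 60.0) -> dict[str, Any]:
    """POST the JSON body and decode the JSON reply (needs network access)."""
    data = json.dumps(body).encode("utf-8")
    req = urllib.request.Request(url, data=data, headers=headers, method="POST")
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return json.load(resp)


url, headers, body = openrouter_fallback_request(
    "Explain attention in one paragraph.", os.environ.get("OPENROUTER_API_KEY", "")
)
# reply = send(url, headers, body)  # requires a funded key
# print(reply["model"], reply["choices"][0]["message"]["content"])
```

Switching models, or adding a third fallback, means editing a string in the `models` list rather than integrating another SDK.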

As of the time of writing, OpenRouter provides access to over 60 models, from closed-source ones from OpenAI, Google, and Anthropic to open-source ones from Meta, Mistral, and independent model creators. They are very quick to integrate newer, better models into their service. All of this, again, with a single API.

It’s clear how OpenRouter solves two of the four challenges I mentioned: API management and syntax. Let’s now look at the other two.

Costs

For closed-source models, OpenRouter matches the price set by model providers.

For open-source models, where independent providers compete over costs, OpenRouter automatically routes to the cheapest one. Developers can build with the assurance that they are not overpaying.

In fact, as of the time of writing, OpenRouter provides many of the top models for free!

Identifying the Best Model

OpenRouter has two features that aid developers in identifying the best model to serve their prompts and applications.

Auto mode

For a specific prompt, developers can set the model field in the API call to "openrouter/auto". OpenRouter then analyzes the prompt (considering factors like size, subject, and complexity) and routes it to the model best suited to serve it.
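In request terms, auto mode only changes the `model` field. A minimal sketch, assuming OpenRouter’s OpenAI-compatible request and response shape, where the response’s `model` field reports which model actually handled the request; the `served_by` and `tally` helpers are hypothetical, and the responses in the example are made up rather than real API output.

```python
from collections import Counter
from typing import Any, Iterable


def auto_request(prompt: str) -> dict[str, Any]:
    """Request body that leaves the choice of model to OpenRouter."""
    return {"model": "openrouter/auto", "messages": [{"role": "user", "content": prompt}]}


def served_by(response: dict[str, Any]) -> str:
    # Assumption: the reply names the model that answered in its "model" field.
    return response["model"]


def tally(responses: Iterable[dict[str, Any]]) -> Counter:
    """Count which models auto mode picked, e.g. to spot-check its choices."""
    return Counter(served_by(r) for r in responses)


# Made-up responses standing in for real API replies.
fake = [{"model": "model-x"}, {"model": "model-y"}, {"model": "model-x"}]
print(tally(fake).most_common(1))  # [('model-x', 2)]
```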

Rankings

On their website, OpenRouter provides rankings, over different time frames, for:

- the most used models
- the top apps by token usage

Developers can use these insights, extracted from the wisdom of the crowds, to guide their model decisions.

Business Model

OpenRouter makes money by charging a fee at the time of API recharge.

For fiat payments, in addition to the fees of payment provider Stripe, OpenRouter charges 0.6% of the recharge + a $0.03 flat fee.

For crypto payments, they charge 4% of the recharge (in addition to 1% charged by Coinbase) and no flat fee.
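A quick worked example of what those fees mean for a top-up, using only the percentages above. Stripe’s own fee is left out because its rate isn’t given here, and the rates themselves are as of the time of writing.

```python
def fiat_fee(recharge_usd: float) -> float:
    """OpenRouter's card fee as described above: 0.6% plus a $0.03 flat fee.
    Stripe's processing fee would come on top and is not modeled."""
    return recharge_usd * 0.006 + 0.03


def crypto_fee(recharge_usd: float) -> float:
    """4% OpenRouter fee plus 1% Coinbase fee, with no flat fee."""
    return recharge_usd * (0.04 + 0.01)


for amount in (10, 100):
    print(f"${amount}: fiat ${fiat_fee(amount):.2f}, crypto ${crypto_fee(amount):.2f}")
# $10: fiat $0.09, crypto $0.50
# $100: fiat $0.63, crypto $5.00
```

For these amounts the crypto route costs roughly five to eight times as much as OpenRouter’s fiat fee, before Stripe’s cut is added to the latter.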

It’s a simple pricing model that aligns the service’s incentives well with those of its developers. If OpenRouter continues to provide a simple user experience, a wide range of models, and competitive pricing, developers will route more of their spending through the service, increasing its revenue.

Conclusion

The trend towards a multi-model world, and the need for a service like OpenRouter, becomes obvious when you look at this chart from their website, which maps the usage of different LLMs over time.

Apart from a steady rise in usage, the chart shows how dynamic the current LLM landscape is: thanks to non-stop innovation, newer and better models arrive week after week.

The value (and, I’d say, the genius) of OpenRouter lies in abstracting away the complexities of this dynamic landscape behind a simple, elegant API. This lets developers focus on building their specific LLM-based applications rather than on the general intricacies of different models and providers.

Today, OpenRouter provides access to fewer than 100 models. A year from now, that number could comfortably be in the thousands. As this unfolds, the company, as a gatekeeper in a multi-model world, may well become one of the more lucrative businesses to emerge from this AI wave.