In earlier posts, I broke down how memory works for ChatGPT and Claude. One leans into always-on personalization; the other treats memory like a tool you explicitly call. With yesterday's launch of Gemini 3, it's the perfect moment to examine how Google's approach to AI memory stacks up.

Gemini quietly serves over 650 million monthly active users—a staggering number that flies under the radar in most tech discourse. Built by the company that already sits on our search, email, maps, and browser histories, Gemini should theoretically deliver the most personal AI experience of all.

Yet Google takes a distinctly different path. Where others push toward magical, seamless personalization, Gemini remains deliberately cautious—both in when personalization kicks in and what data gets stored.

In this piece, I'll walk through how Gemini's memory works, Google's choice of caution over magic, what they get right, and what this restraint might cost them in the race for personal AI.

How it Works

As assistant applications mature, their memory systems are converging on a common architecture: compressed long-term memory paired with raw working memory. Gemini follows this template, but with important nuances.

At its core, Gemini maintains a conversation summary that the model sees as user_context. Like ChatGPT's "user knowledge memories," it's an LLM-generated profile distilled from past chats and refreshed periodically as new conversations accumulate.

Gemini pairs this with a block of "recent conversation turns"—raw messages since the last background summarization. Because summaries don't update in real time, these recent messages serve as a delta: fresh information that can override or refine the compressed profile. If you haven't talked to Gemini since the last summarization, this block is empty.
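To make the two-part scaffold concrete, here is a minimal sketch of how a compressed profile and a raw delta might be combined and later folded together. Everything in it is an assumption for illustration: the `MemoryState` class, the tag names in the rendered block, and the `summarizer` callable are hypothetical and are not taken from Gemini's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MemoryState:
    """Hypothetical container for the two pieces described above."""
    user_context: str  # compressed, LLM-generated profile
    recent_turns: list[str] = field(default_factory=list)  # raw messages since the last summarization


def build_memory_block(state: MemoryState) -> str:
    """Render the long-term summary followed by the raw delta of recent turns."""
    parts = ["<user_context>", state.user_context, "</user_context>", "<recent_turns>"]
    parts.extend(state.recent_turns)  # stays empty if nothing was said since the last summary
    parts.append("</recent_turns>")
    return "\n".join(parts)


def summarize(state: MemoryState, summarizer: Callable[[str, list[str]], str]) -> MemoryState:
    """Background job: fold recent turns into the profile, then clear the delta."""
    new_context = summarizer(state.user_context, state.recent_turns)
    return MemoryState(user_context=new_context, recent_turns=[])
```

The point of the sketch is the lifecycle: recent turns ride along verbatim until the next background pass, at which point they are absorbed into the profile and the delta resets to empty.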

This scaffold is fairly standard now. What sets Gemini apart is how it structures user_context, what it chooses to remember, the metadata it attaches to each memory, and the strict rules governing when that information actually influences responses.

Structure

Gemini stores what it knows about you in a single document called user_context: a typed outline made up of short, factual bullets. Here's a redacted slice from my own profile:

Demographic information

Statement: The user is Shlok Khemani, a 26-year-old builder-researcher. (Rationale: User explicitly stated on June 18, 2025: "I'm Shlok Khemani, a 26-year-old builder-researcher…")

Interests & preferences

Statement: The user now splits his time between Bangalore and Mumbai. (Rationale: User explicitly stated on November 7, 2025: "i'm splitting my time between bangalore and mumbai now…")

Unlike ChatGPT's user knowledge memories, Gemini memories are stored in a fixed schema with sections. In my snapshot, the summary was broken into:

- Demographic information – name, age, where I live, where I studied, and where I've worked.

- Interests and preferences – what I care about and enjoy: technologies I use, topics I keep coming back to, my long-term goals.

- Relationships – important people in my life.

- Dated events, projects, and plans – what I've been doing and when: past roles, trips, ongoing projects, and current areas of focus, all tagged with approximate time.
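One way to picture this typed outline is as a list of records, each tagged with one of the four sections. The sketch below is my own modeling of what I observed, not Gemini's internal format: the `Section` enum, the `Memory` dataclass, and the `render` function are all hypothetical names.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class Section(Enum):
    """The four sections seen in my snapshot (the enum itself is an assumption)."""
    DEMOGRAPHICS = "Demographic information"
    INTERESTS = "Interests and preferences"
    RELATIONSHIPS = "Relationships"
    EVENTS = "Dated events, projects, and plans"


@dataclass(frozen=True)
class Memory:
    section: Section
    statement: str   # the short factual bullet
    rationale: str   # where the fact came from
    stated_on: date  # when the user said it


def render(memories: list[Memory]) -> str:
    """Group memories under their section headings, in schema order."""
    lines: list[str] = []
    for section in Section:
        entries = [m for m in memories if m.section is section]
        if not entries:
            continue  # skip sections with nothing stored
        lines.append(section.value)
        for m in entries:
            lines.append(f"Statement: {m.statement} (Rationale: {m.rationale})")
    return "\n".join(lines)
```

Because every record carries its section, a renderer like this can emit the outline in a stable order regardless of the order in which facts were learned.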

Beyond better organization, I see this layout serving two long-term design goals.

First, the sections have different "half-lives." Demographic facts change rarely. Dated events and active projects change constantly. By separating these into different buckets, Gemini can be deliberate about what gets rewritten. Your current project might be revised weekly; your education history almost never. The model doesn't have to re-summarize your entire life story every time you start a new side project.

Second, this structure creates natural seams for different update paths and data sources. Each section could be refreshed by a different prompt, a different Google surface, or stored in a different format entirely. Demographics could be seeded from your account profile and live as key–value pairs. Interests might be updated from chat behavior and product usage. Relationships could cross-reference your contacts. Dated events could be stitched together from conversations, Calendar, Docs, and other activity, then stored as a structured event log.

To be clear, this is speculation—none of this applies to today's product. But the schema seems to anticipate a world where "the user's memory" isn't one opaque blob but a set of coordinated views over everything Google knows about you, each updated at its own pace.

Metadata: time and rationale

The other striking thing about Gemini's user_context is that each memory statement comes annotated with a rationale pointing back to the source interaction and date. It takes memory from "the model somehow knows this" to "the model knows this because you said X on date Y." Gemini is the first mainstream chatbot to surface this temporal grounding so explicitly, and it fixes an entire class of problems.

I previously criticized ChatGPT's memory for being effectively timeless: once something was learned, it stayed "currently true" forever. If I said in mid-2024 that I was "seriously considering a move to San Francisco," the model might still frame that as a live plan a year later, even if I'd never mentioned it again. With explicit dates, Gemini can interpret that as a time-bound possibility rather than a permanent identity trait. When it's late 2025, "considering a move to San Francisco (updated June 2024)" can be seen as historical context and not present intention.
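As a rough illustration of what a date buys you, the sketch below frames a memory as current or historical depending on its age. The function name and the 180-day cutoff are assumptions I picked for the example; in practice the model presumably makes this judgment itself from the dates in the rationale.

```python
from datetime import date


def frame_memory(statement: str, stated_on: date, today: date,
                 stale_after_days: int = 180) -> str:
    """Label a memory as current or historical based on how old it is.

    The 180-day default is an arbitrary, illustrative threshold.
    """
    age_days = (today - stated_on).days
    stamp = stated_on.isoformat()
    if age_days > stale_after_days:
        return f"Historical context (stated {stamp}): {statement}"
    return f"Possibly current (stated {stamp}): {statement}"


# A plan mentioned in mid-2024, read back in late 2025, is framed as history.
print(frame_memory("considering a move to San Francisco",
                   date(2024, 6, 15), date(2025, 11, 20)))
```

A timeless memory store has no way to make this distinction, which is exactly the failure described above.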

Timestamps also matter during memory creation and update as a conflict-resolution tool. If my "current role" was last updated a year ago and I now say "I've joined a new company full-time," the system can reasonably overwrite the old job. If that same role was updated yesterday and today I mention a different position, the story is murkier. Am I moonlighting? Describing a previous job? A side project? A cautious system shouldn't immediately clobber yesterday's truth with today's ambiguity—and temporal metadata is the first line of defense.
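The conflict-resolution idea can be sketched as a simple rule: overwrite when the existing fact is old, hold back when it is fresh. The names and the 90-day settle period below are hypothetical, and a real system would likely weigh far more than dates.

```python
from datetime import date, timedelta
from enum import Enum


class Resolution(Enum):
    OVERWRITE = "overwrite the old value"
    HOLD = "keep both and treat the new claim as ambiguous"


def resolve_conflict(last_updated: date, new_stated_on: date,
                     settle_period: timedelta = timedelta(days=90)) -> Resolution:
    """Decide what to do when a new statement contradicts a stored one.

    The settle period is an illustrative assumption: a fact older than this
    is considered safe to replace, a fresher one is not.
    """
    if new_stated_on - last_updated >= settle_period:
        return Resolution.OVERWRITE
    return Resolution.HOLD


# Role last updated a year ago: safe to replace.
print(resolve_conflict(date(2024, 11, 1), date(2025, 11, 1)))
# Role updated yesterday: do not clobber it on ambiguous evidence.
print(resolve_conflict(date(2025, 10, 31), date(2025, 11, 1)))
```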

The rationale text itself makes the memory system inspectable. If you ask, "How do you know this about me?", Gemini can point back to the exact conversation and date. That traceability builds user trust and enables governance.

It also makes deletion conceptually straightforward. Since memories link to specific source conversations, deleting a conversation deletes the derived memories. Instead of ghostly facts that live on forever, you get an intuitive model: when the root data goes, the leaves get pruned too.
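In data-structure terms, this is a cascade keyed on the source conversation. The sketch below assumes each memory stores an identifier for the conversation it came from; the `SourcedMemory` type and the ID format are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourcedMemory:
    statement: str
    source_conversation_id: str  # hypothetical link back to the originating chat


def delete_conversation(memories: list[SourcedMemory],
                        conversation_id: str) -> list[SourcedMemory]:
    """Return the memory list with everything derived from one conversation pruned."""
    return [m for m in memories if m.source_conversation_id != conversation_id]


memories = [
    SourcedMemory("The user is a builder-researcher.", "conv-a"),
    SourcedMemory("The user splits time between Bangalore and Mumbai.", "conv-b"),
]
# Deleting conversation "conv-a" removes the fact that was learned there.
remaining = delete_conversation(memories, "conv-a")
```

Without the source link, the system would have no principled way to know which facts to drop when a conversation disappears.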

Of course, being this explicit costs tokens. Every memory now has two pieces—the statement and the rationale—more than doubling the memory block's size compared to bare facts alone. That extra text translates directly into higher inference cost, more context window eaten by user_context, and less room for everything else.
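A quick back-of-the-envelope check on the demographic entry quoted earlier shows the overhead. The sketch counts whitespace-separated words as a crude stand-in for tokens, which is an assumption; real tokenizer counts will differ, though the ratio should land in the same ballpark.

```python
statement = "The user is Shlok Khemani, a 26-year-old builder-researcher."
rationale = (
    "User explicitly stated on June 18, 2025: "
    "\"I'm Shlok Khemani, a 26-year-old builder-researcher…\""
)


def word_count(text: str) -> int:
    """Crude proxy for token count: number of whitespace-separated words."""
    return len(text.split())


bare = word_count(statement)               # the fact alone
annotated = bare + word_count(rationale)   # the fact plus its rationale
overhead = annotated / bare

print(f"{bare} words bare, {annotated} with rationale, about {overhead:.1f}x")
```

For this entry the statement is 8 words and the rationale adds 13 more, so the annotated memory is well over twice the size of the bare fact.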

Control

Another design decision every memory product has to grapple with is when memory gets used and how users control it.

Different products sit at different points on the spectrum. For ChatGPT, unless you're in a temporary chat or have turned memory off entirely, the system always tries to personalize answers. When it works, it's uncanny: it contextualizes a query to a relevant aspect of your life or threads together two distant conversations in a way you never would have. At other times, it's frustrating: you ask a narrow, one-off question and it drags in some half-abandoned plan from months ago.

Gemini takes a more constrained route. The conversation summary is passed alongside every prompt, but the system prompt comes with a giant, all-caps warning that the user data block is off-limits by default. In effect, the model is told: "This user profile is here, but you must ignore it unless the user explicitly invites personalization."

Only when your query contains trigger phrases—"based on what you know about me" or "given my interests"—can the model reach into user_context, and even then it's instructed to use only the minimal data needed and avoid sensitive inferences.

Gemini acts dumb by design. It avoids almost all of the "why are you bringing this up right now?" failure modes, because personalization is opt-in at the prompt level rather than silently inferred. But that safety comes with a cost. By clamping down on when memory can fire, Google shrinks the surface area for serendipity. There are fewer moments where the system surprises you by connecting dots you didn't realize were related—because it's not allowed to reach for those dots unless you explicitly ask.

There's also a practical trade-off under the hood. Because deciding whether to use memory requires non-trivial reasoning over your request and the system rules, the feature is restricted to the slower, "thinking" Pro models. The lighter-weight Flash models don't see user_context at all.

For reference, the relevant rules from the system prompt read:

- MASTER RULE: DO NOT USE USER DATA. Information in the user_context block is RESTRICTED by default. You must not use any of it in your response.

- EXCEPTION: EXPLICIT USER REQUEST. You are only authorized to access and use user_context data if the user's current prompt contains a direct trigger phrase requesting personalization.

  - Triggers include: "Based on what you know about me," "Considering my interests," "Personalize this for me," "What do you know about me?"

- POST-AUTHORIZATION RULES: If a trigger is detected, you may access the data, but you must still obey the following:

  a. Honor Deletions: Any "forget" requests in the Recent Turns must be followed.

  b. Ignore Styles: Past conversational styles are always invalid.

  c. Essential Use Only: Only use the specific data point needed to fulfill the explicit request. Do not add extra, unsolicited personalization.

  d. SENSITIVE DATA RESTRICTION: Do not make inferences about the user's sensitive attributes including: mental or physical health diagnosis or condition; national origin, racial or ethnic origin, citizenship status, immigration status; religious beliefs; sexual orientation, sex life; political opinions.
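The gating logic in these rules can be approximated in a few lines. To be clear about the assumptions: in the real product the model itself decides whether a prompt invites personalization by reasoning over these instructions, so the naive substring match below is only a stand-in, and the function names are hypothetical. The phrase list is the one quoted in the rules, which says "include" and so may not be exhaustive.

```python
# Trigger phrases quoted in the system prompt excerpt, lowercased for matching.
TRIGGER_PHRASES = (
    "based on what you know about me",
    "considering my interests",
    "personalize this for me",
    "what do you know about me",
)


def personalization_requested(prompt: str) -> bool:
    """Naive stand-in for the model's judgment: look for a trigger phrase."""
    normalized = prompt.lower()
    return any(phrase in normalized for phrase in TRIGGER_PHRASES)


def usable_context(prompt: str, user_context: str) -> str:
    """Restricted by default: expose the profile only on an explicit request."""
    return user_context if personalization_requested(prompt) else ""


profile = "The user is a 26-year-old builder-researcher."
print(repr(usable_context("What's the capital of France?", profile)))
print(repr(usable_context("Based on what you know about me, suggest a book.", profile)))
```

The default-deny shape is the important part: the profile is always present, but nothing flows into the answer unless the prompt opens the gate.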

Simple and extensible

Architecturally, Gemini's memory is much simpler than ChatGPT's and Claude's.

ChatGPT fragments memory across multiple modules with different lifecycles and precedence rules: recent conversation logs, explicit user memories, and AI-generated summaries. Claude similarly separates raw conversation history (queried via tools) from newer profile-style summaries. In both systems, "who you are" is distributed across several stores that must be orchestrated.

Gemini consolidates everything into one primary artifact plus a minimal tail: a structured user_context document and a rolling window of recent messages. There's a single place where long-term knowledge lives, a single summarization pipeline that updates it, and a single path to the model. The same blob carries sections, timestamps, rationales, and explicit user instructions.

This single source of truth makes Gemini's memory both elegant and highly extensible. There's no ambiguity about which module should receive memory additions—they all flow through the user_context pipeline as additional instructions or sections.

Gemini clearly benefited from arriving late to the memory game. After watching others accumulate complexity through iteration, Google could design from a clean slate and appears to have built what the others might build if they could start over.

The Big Miss

If context is the new battleground, Google starts with a structural advantage no one else has: your mail, docs, calendar, photos, maps, browser, even your phone. ChatGPT and Claude are still begging for slivers of that graph through connectors; Google already sits on the whole thing.

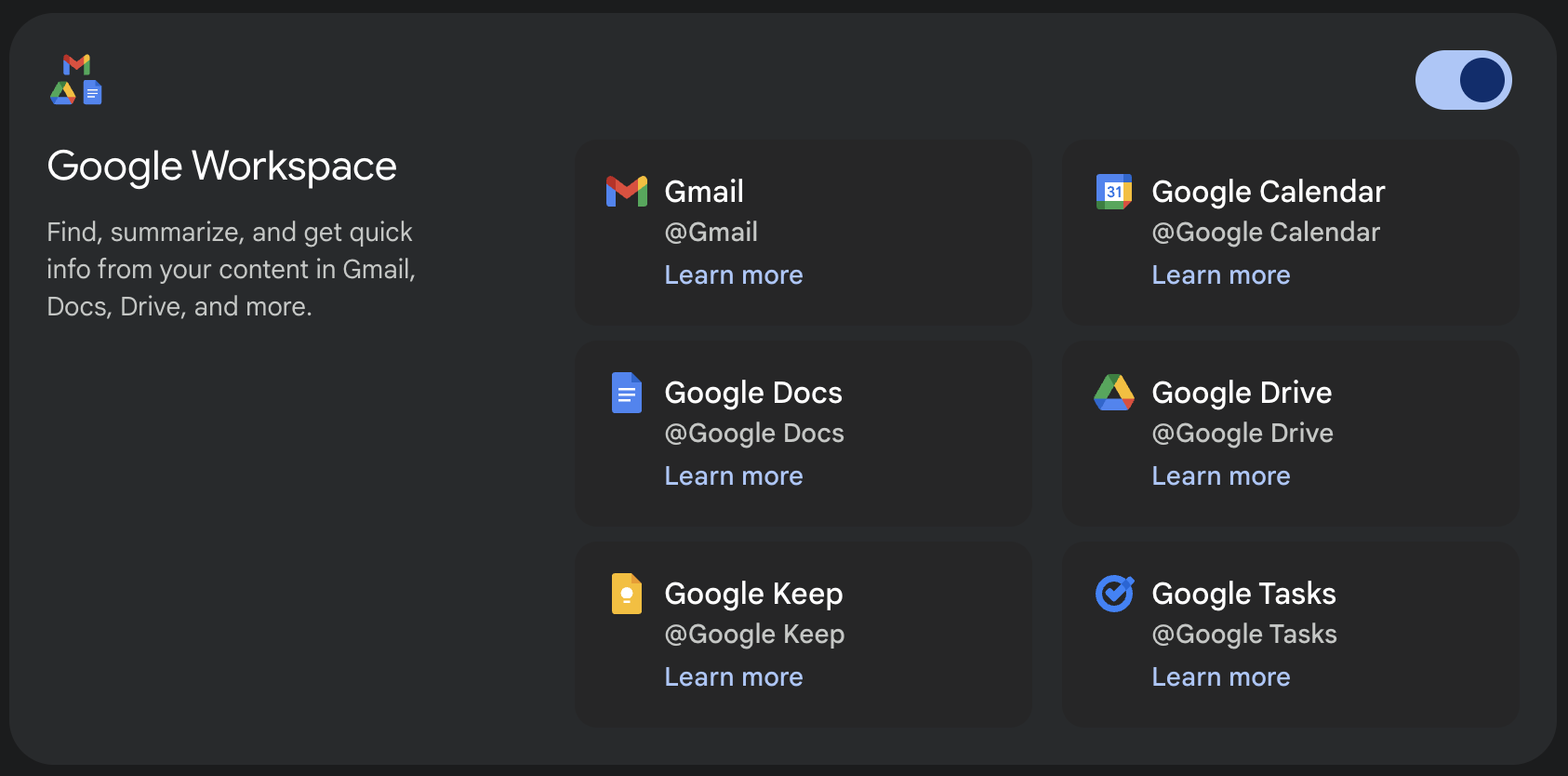

And to their credit, the pipes are technically there. With a single toggle in settings, you can let Gemini see Gmail, Drive, Docs, and the rest without messy OAuth flows or permission mazes. Once connected, it can already do useful things: summarize your inbox, draft calendar invites, pull in details from a document.

The Google Workspace connector hides in settings

The problem is that Gemini doesn't really lean on any of this. ChatGPT's Pulse keeps nudging you to connect your calendar. Claude repeatedly insists you "bring your docs in here and chat with them." Gemini, by contrast, hides the workspace connector in settings and then tiptoes around it. The ability to fuse your life into the assistant is treated as an optional enhancement, not the center of the product.

In an ideal world, the user_context blob we inspected would be the tip of a much deeper iceberg—stitched together from data across Gmail, Calendar, Docs, Maps, Chrome, Android, and more. Instead, it lives isolated as a well-structured profile built from chat and not from the broader Google universe.

Some of this is almost certainly Google playing it safe—sensitive to privacy optics, regulatory pressure, and the risk of creeping people out. Some of it is institutional caution and a bias toward moving slowly on cross-product data. Whatever the mix of reasons, the outcome is that Google is dramatically under-capitalizing on the strongest context position in the industry.

With the new Gemini releases, Google has finally gotten its act together at the model layer after being blindsided by the first wave of chatbots. The next—maybe bigger—test is whether it can bring the same clarity and ambition to product, and turn that dormant context advantage into experiences that genuinely feel impossible anywhere else.
